AI provides face read-out of genetic diseases

Experts in artificial intelligence (AI) have created another problem for bioethicists and data protection specialists: their algorithm has learned to identify people with rare genetic syndromes from facial images.

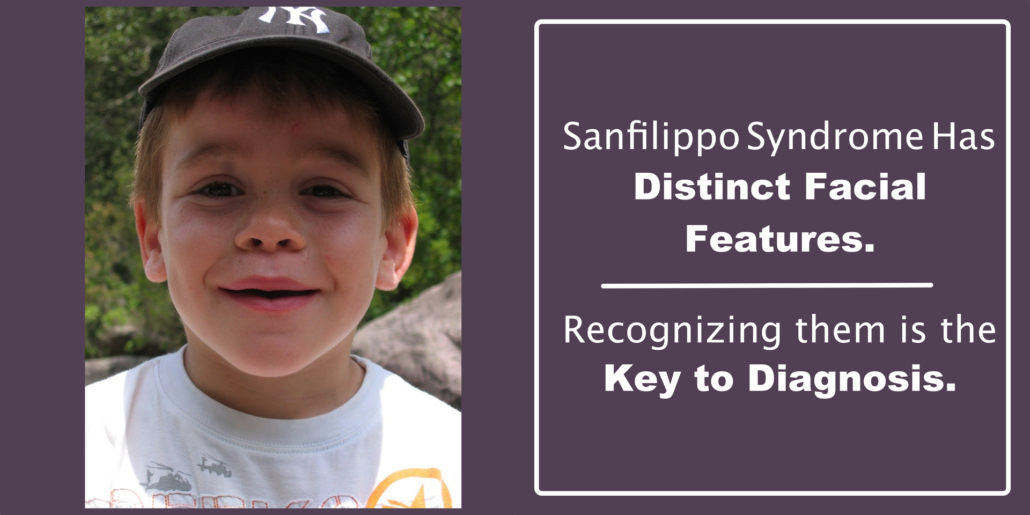

A self-learning algorithm created by the US-Israeli company FDNA identified rare genetic syndromes with high accuracy after being trained on more than 17,000 real-world facial images of patients, the bio-IT experts reported in Nature Medicine. Although further research is needed to refine the AI's identifications and to compare its results with other diagnostic approaches, the invasive technology poses another challenge to the EU Charter of Fundamental Rights. On the other hand, the distinctive facial features associated with many genetic syndromes could help clinicians diagnose rare syndromes and target therapy earlier than is possible today. At present, diagnosing an orphan disease takes five years on average.

Yaron Gurovich and colleagues trained a deep learning algorithm on more than 17,000 facial images of patients whose diagnoses span hundreds of distinct genetic syndromes. The images came from a community-driven platform to which clinicians upload photographs of patients' faces. The authors tested the AI's performance on two independent test sets, each containing facial images of hundreds of patients who had previously been analysed by clinical experts. For each test image, the AI proposed a ranked list of potential syndromes. On both sets, the correct syndrome appeared among the AI's top 10 suggestions around 90% of the time, and the AI outperformed clinical experts in three separate experiments. Although this study used relatively small test sets and involved no direct comparisons with other existing identification methods or human experts, the results suggest that AI could help clinicians prioritise and diagnose rare genetic syndromes.
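The headline figure is a top-10 accuracy: a test image counts as a hit if the correct syndrome appears anywhere among the first ten entries of the AI's ranked list. The minimal Python sketch below computes that kind of metric. The function name `top_k_accuracy` and the toy labels are hypothetical illustrations, not FDNA's code or data from the study.

```python
from typing import Sequence


def top_k_accuracy(ranked_predictions: Sequence[Sequence[str]],
                   true_labels: Sequence[str], k: int = 10) -> float:
    """Fraction of test cases whose true label is among the first k ranked guesses.

    Hypothetical helper for illustration only; not the study's evaluation code.
    """
    if len(ranked_predictions) != len(true_labels):
        raise ValueError("need exactly one ranked list per test case")
    if not true_labels:
        raise ValueError("test set is empty")
    # A case is a hit if the correct label occurs anywhere in the top-k slice.
    hits = sum(1 for ranked, truth in zip(ranked_predictions, true_labels)
               if truth in ranked[:k])
    return hits / len(true_labels)


# Toy ranked suggestions with made-up labels (not data from the study).
predictions = [
    ["syndrome_A", "syndrome_B", "syndrome_C"],
    ["syndrome_C", "syndrome_A", "syndrome_B"],
    ["syndrome_B", "syndrome_C", "syndrome_A"],
    ["syndrome_D", "syndrome_B", "syndrome_C"],
]
truth = ["syndrome_A", "syndrome_A", "syndrome_D", "syndrome_C"]

print(top_k_accuracy(predictions, truth, k=1))  # 0.25
print(top_k_accuracy(predictions, truth, k=2))  # 0.5
print(top_k_accuracy(predictions, truth, k=3))  # 0.75
```

Widening the window from k=1 to larger values can only keep or raise the score, which is why a top-10 figure such as the roughly 90% reported here will generally exceed the share of cases in which the AI's first suggestion alone was correct.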

The authors note that because individuals’ facial images are sensitive but easily accessible data, care must be taken to prevent discriminatory abuse of this technology.